I want to implement ResNet using PyTorch.

The implementation below is based on what I studied at https://arsetstudium.tistory.com/45.

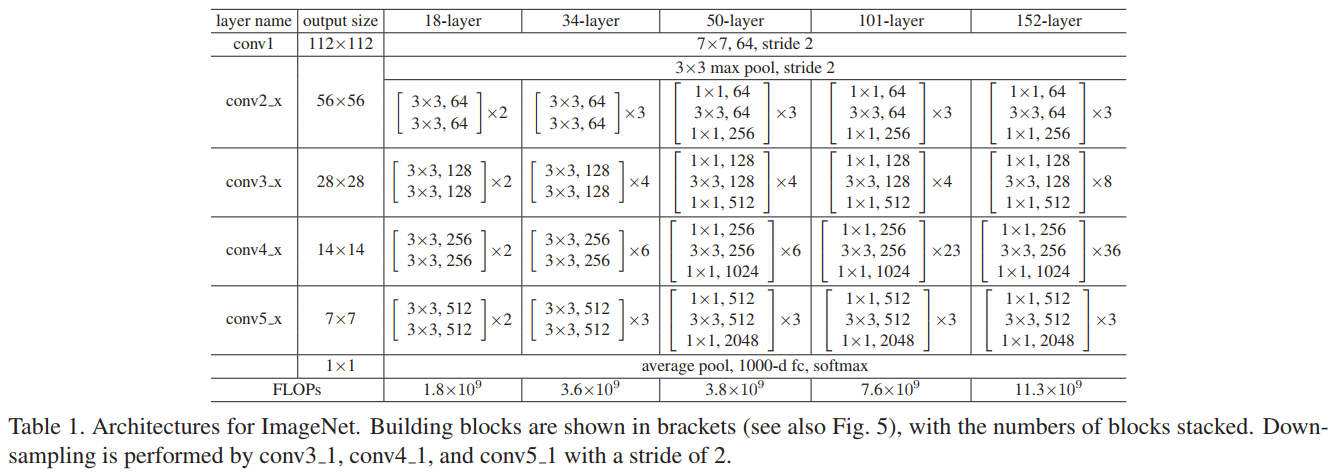

ResNet comes in several depths: 18, 34, 50, 101, and 152 layers.

Like VGGNet, ResNet can be divided into blocks.

I want to implement it block by block, written so that any of these depths can be built.
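As a quick sanity check on those depth names, the layer counts can be reproduced with a little arithmetic. The sketch below assumes the per-stage sub-block counts from Table 1 of the paper (the same numbers used as `num_loops` later in this post), with 2 convolutions per sub-block for the 18/34-layer networks and 3 for the deeper ones, plus the first 7x7 convolution and the final fully connected layer. The names `STAGE_BLOCKS` and `count_layers` are made up for this illustration.

```python
# Per-stage sub-block counts for conv2..conv5, as listed in Table 1 of the ResNet paper.
STAGE_BLOCKS = {
    18: [2, 2, 2, 2],
    34: [3, 4, 6, 3],
    50: [3, 4, 6, 3],
    101: [3, 4, 23, 3],
    152: [3, 8, 36, 3],
}


def count_layers(depth: int) -> int:
    """Count weighted layers: first conv + convs in all sub-blocks + final fc."""
    convs_per_sub_block = 2 if depth in (18, 34) else 3
    return 1 + convs_per_sub_block * sum(STAGE_BLOCKS[depth]) + 1


for depth in STAGE_BLOCKS:
    print(depth, count_layers(depth))
```

Each line prints the same number twice, which is where the names ResNet-18 through ResNet-152 come from.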

First, the sub-block code used to build each block.

In terms of Table 1, this is the code that builds each individual conv2_x inside the conv2 block.

    import torch.nn as nn


    # Sub-block for 18, 34 layers
    class ResNetTwoSubBlock(nn.Module):
        def __init__(self, in_features, out_features, start=False):
            super().__init__()
            # start=True marks the first sub-block of a block, which halves
            # the feature map with a stride-2 convolution.
            stride = 2 if start else 1
            self.conv_sub_block = nn.Sequential(
                nn.Conv2d(in_features, out_features, kernel_size=3, stride=stride, padding=1),
                nn.ReLU(),
                nn.Conv2d(out_features, out_features, kernel_size=3, padding=1),
                nn.ReLU(),
            )

        def forward(self, x):
            return self.conv_sub_block(x)


    # Sub-block for 50, 101, 152 layers
    class ResNetThreeSubBlock(nn.Module):
        def __init__(self, in_features, mid_features, out_features, start=False):
            super().__init__()
            # Here the stride-2 downsampling sits on the first 1x1 convolution.
            stride = 2 if start else 1
            self.conv_sub_block = nn.Sequential(
                nn.Conv2d(in_features, mid_features, kernel_size=1, stride=stride, padding=0),
                nn.ReLU(),
                nn.Conv2d(mid_features, mid_features, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.Conv2d(mid_features, out_features, kernel_size=1, padding=0),
                nn.ReLU(),
            )

        def forward(self, x):
            return self.conv_sub_block(x)

The following code uses the sub-blocks above to build the full stack of layers.

    class ResNet(nn.Module):
        # out_features is accepted but unused: this attempt stops before the classifier head.
        def __init__(self, in_features, out_features, num_layers=34):
            super().__init__()
            self.num_layers = num_layers

            # First Conv Block
            # Printing the shapes shows that padding must be 3 to get the 112 output size.
            self.conv1 = nn.Sequential(
                nn.Conv2d(in_features, 64, kernel_size=7, stride=2, padding=3),
                nn.ReLU(),
            )
            # padding size 1
            self.max_pool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

            if self.num_layers == 18:
                num_loops = [2, 2, 2, 2]
            elif self.num_layers in (34, 50):
                num_loops = [3, 4, 6, 3]
            elif self.num_layers == 101:
                num_loops = [3, 4, 23, 3]
            elif self.num_layers == 152:
                num_loops = [3, 8, 36, 3]
            else:
                raise ValueError(f"Unsupported num_layers: {num_layers}")

            # 18- or 34-layer architecture
            if self.num_layers in (18, 34):
                self.conv2_block = self.build_blocks_two(num_loops[0], 64, 64, start=False)
                self.conv3_block = self.build_blocks_two(num_loops[1], 64, 128)
                self.conv4_block = self.build_blocks_two(num_loops[2], 128, 256)
                self.conv5_block = self.build_blocks_two(num_loops[3], 256, 512)
                # Transform layers to connect residual connections
                self.connect3 = nn.Conv2d(64, 128, kernel_size=1, stride=2)
                self.connect4 = nn.Conv2d(128, 256, kernel_size=1, stride=2)
                self.connect5 = nn.Conv2d(256, 512, kernel_size=1, stride=2)
            # 50-, 101-, or 152-layer architecture
            else:
                self.conv2_block = self.build_blocks_three(num_loops[0], 64, 64, 256, start=False)
                self.conv3_block = self.build_blocks_three(num_loops[1], 256, 128, 512)
                self.conv4_block = self.build_blocks_three(num_loops[2], 512, 256, 1024)
                self.conv5_block = self.build_blocks_three(num_loops[3], 1024, 512, 2048)
                # Transform layers to connect residual connections
                self.connect3 = nn.Conv2d(256, 512, kernel_size=1, stride=2)
                self.connect4 = nn.Conv2d(512, 1024, kernel_size=1, stride=2)
                self.connect5 = nn.Conv2d(1024, 2048, kernel_size=1, stride=2)

        # Build the conv2, 3, 4, 5 blocks
        def build_blocks_two(self, iter_loops, in_channels, out_channels, start=True):
            block_list = [ResNetTwoSubBlock(in_channels, out_channels, start=start)]
            for _ in range(iter_loops - 1):
                block_list.append(ResNetTwoSubBlock(out_channels, out_channels))
            return nn.ModuleList(block_list)

        def build_blocks_three(self, iter_loops, in_channels, mid_channels, out_channels, start=True):
            block_list = [ResNetThreeSubBlock(in_channels, mid_channels, out_channels, start=start)]
            for _ in range(iter_loops - 1):
                block_list.append(ResNetThreeSubBlock(out_channels, mid_channels, out_channels))
            return nn.ModuleList(block_list)

        def forward(self, x):
            # Only the 18/34-layer path is wired up. The bottleneck variants would also
            # need a projection on the first conv2 sub-block (64 -> 256 channels).
            if self.num_layers not in (18, 34):
                raise NotImplementedError("forward is only implemented for 18 and 34 layers")

            y = self.conv1(x)
            y = self.max_pool(y)

            # Conv 2 Block: input and output shapes match, so the shortcut is the identity
            for conv in self.conv2_block:
                y = conv(y) + y

            # Conv 3, 4, 5 Blocks: the first sub-block changes channels and resolution,
            # so its shortcut goes through the matching 1x1 connect layer
            for block, connect in (
                (self.conv3_block, self.connect3),
                (self.conv4_block, self.connect4),
                (self.conv5_block, self.connect5),
            ):
                for i, conv in enumerate(block):
                    shortcut = connect(y) if i == 0 else y
                    y = conv(y) + shortcut
            return y
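The padding choice for the first convolution can also be checked by hand with the usual output-size formula for a convolution or pooling layer, floor((size + 2 * padding - kernel) / stride) + 1. The sketch below assumes a 224x224 input, as in the paper; `out_size` is a helper written only for this illustration.

```python
def out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# First 7x7 convolution with stride 2: compare a few padding values
for padding in (2, 3, 4):
    print(padding, out_size(224, kernel=7, stride=2, padding=padding))

# 3x3 max pooling with stride 2 and padding 1, applied to the 112x112 map
print(out_size(112, kernel=3, stride=2, padding=1))
```

Padding 2 gives 111 and padding 4 gives 113, so padding 3 is the value that lands on 112; the max pooling then takes 112 down to 56.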

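Partway through, I tried to use a linear transformation for the shortcut connection, failed, and then consulted the implementation in torchvision.

So I tried 1x1 convolutions (the self.connect layers) on the shortcut: a 1x1 kernel does no spatial mixing and changes only the number of channels, while stride 2 matches the halved feature map.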
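To see what such a connect layer does to shapes, here is a minimal sketch. It assumes a 64-channel 56x56 input (the conv2 output size for a 224x224 image) and uses a single stride-2 3x3 convolution as a stand-in for the main path; the variable names are only for illustration.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 56, 56)  # assumed conv2 output for a 224x224 input

# Shortcut path: 1x1 convolution, stride 2, 64 -> 128 channels (same settings as self.connect3)
connect = nn.Conv2d(64, 128, kernel_size=1, stride=2)
# Stand-in for the first convolution of the main path: 3x3, stride 2, padding 1
main = nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1)

shortcut, out = connect(x), main(x)
print(shortcut.shape, out.shape)  # both have shape (1, 128, 28, 28)
y = out + shortcut  # shapes agree, so the residual addition works
```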

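For the 18- and 34-layer ResNets, the residual connection (= shortcut connection) works as long as the channels are matched at convk_1, the first layer of each block, which is the one with stride 2.

For architectures with 50 or more layers, however, the channel count also differs from that of the layer input where the stride is not 2. For example, the first sub-block of conv2 takes 64 channels in and puts 256 out.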
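One way to see the difference is to list, stage by stage, where the first sub-block's input and output disagree. The sketch below borrows the condition that torchvision's `_make_layer` uses (`stride != 1 or inplanes != planes * expansion`); the helper name `needs_projection` is hypothetical.

```python
def needs_projection(expansion: int) -> list:
    """For conv2..conv5, report whether the first sub-block needs a projection shortcut."""
    inplanes = 64  # channels coming out of the stem (conv1 + max pool)
    result = []
    for planes, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        out_channels = planes * expansion
        result.append(stride != 1 or inplanes != out_channels)
        inplanes = out_channels  # later sub-blocks in the stage see this many channels
    return result


print(needs_projection(expansion=1))  # 18/34 layers: [False, True, True, True]
print(needs_projection(expansion=4))  # 50+ layers:   [True, True, True, True]
```

With bottleneck sub-blocks, even conv2 (stride 1) needs a projection, because 64 channels go in and 256 come out.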

So rather than creating self.connect inside the ResNet class, it is better to create it in the sub-block and use it whenever it is needed.
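A minimal sketch of that idea, assuming a simplified two-convolution sub-block without batch normalization: the sub-block builds its own 1x1 projection only when the shapes change. `ShortcutSubBlock` is a hypothetical name, not a class from this post or from torchvision.

```python
import torch
import torch.nn as nn


class ShortcutSubBlock(nn.Module):
    """Two 3x3 convolutions plus a shortcut that the sub-block owns itself."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1),
            nn.ReLU(),
            nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1),
        )
        # Create a projection only when the channel count or resolution changes.
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride)
        else:
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.body(x) + self.shortcut(x))


x = torch.randn(1, 64, 56, 56)
same = ShortcutSubBlock(64, 64)             # identity shortcut
down = ShortcutSubBlock(64, 128, stride=2)  # projection shortcut
print(same(x).shape, down(x).shape)
```

The caller can then chain sub-blocks without needing to know which ones change shape.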

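This made me understand why torchvision keeps a self.downsample module inside the BasicBlock class and applies it to the identity path of the residual connection.

The overall approach was not wrong, but since I leaned on torchvision for all the key parts, I will stop my own implementation here and finish by walking through torchvision's ResNet class, which serves as a kind of model answer.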

    from typing import Callable, List, Optional, Type, Union

    import torch
    import torch.nn as nn
    from torch import Tensor

    # conv3x3 and conv1x1 are helpers defined earlier in the same torchvision file;
    # _log_api_usage_once comes from torchvision.utils.


    # Sub-block used to build the conv2, 3, 4, 5 blocks
    class BasicBlock(nn.Module):
        expansion: int = 1

        def __init__(
            self,
            inplanes: int,
            planes: int,
            stride: int = 1,
            downsample: Optional[nn.Module] = None,
            groups: int = 1,
            base_width: int = 64,
            dilation: int = 1,
            norm_layer: Optional[Callable[..., nn.Module]] = None,
        ) -> None:
            super().__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            if groups != 1 or base_width != 64:
                raise ValueError("BasicBlock only supports groups=1 and base_width=64")
            if dilation > 1:
                raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
            # Both self.conv1 and self.downsample layers downsample the input when stride != 1
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = norm_layer(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = norm_layer(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x: Tensor) -> Tensor:
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)

            return out

    # Unlike the original ResNet, the stride is set on conv2 (the 3x3 convolution) rather than conv1
    class Bottleneck(nn.Module):
        # Bottleneck in torchvision places the stride for downsampling at 3x3 convolution(self.conv2)
        # while original implementation places the stride at the first 1x1 convolution(self.conv1)
        # according to "Deep residual learning for image recognition" https://arxiv.org/abs/1512.03385.
        # This variant is also known as ResNet V1.5 and improves accuracy according to
        # https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch.

        expansion: int = 4

        def __init__(
            self,
            inplanes: int,
            planes: int,
            stride: int = 1,
            downsample: Optional[nn.Module] = None,
            groups: int = 1,
            base_width: int = 64,
            dilation: int = 1,
            norm_layer: Optional[Callable[..., nn.Module]] = None,
        ) -> None:
            super().__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            width = int(planes * (base_width / 64.0)) * groups
            # Both self.conv2 and self.downsample layers downsample the input when stride != 1
            self.conv1 = conv1x1(inplanes, width)
            self.bn1 = norm_layer(width)
            self.conv2 = conv3x3(width, width, stride, groups, dilation)
            self.bn2 = norm_layer(width)
            self.conv3 = conv1x1(width, planes * self.expansion)
            self.bn3 = norm_layer(planes * self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x: Tensor) -> Tensor:
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)

            return out
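One way to get a feel for the difference between the two stride placements: a 1x1 convolution with stride 2 reads only every other pixel, whereas a 3x3 convolution with stride 2 still covers the whole input. The sketch below illustrates just that property with plain `nn.Conv2d` layers; it says nothing about accuracy, and the tensor sizes are arbitrary choices for the illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv1x1_s2 = nn.Conv2d(4, 4, kernel_size=1, stride=2, bias=False)
conv3x3_s2 = nn.Conv2d(4, 4, kernel_size=3, stride=2, padding=1, bias=False)

x = torch.randn(1, 4, 8, 8)
x_changed = x.clone()
x_changed[:, :, 1::2, :] += 1.0  # perturb the odd rows
x_changed[:, :, :, 1::2] += 1.0  # and the odd columns

with torch.no_grad():
    # The strided 1x1 conv samples only even (row, col) positions, so it cannot see the change.
    same_1x1 = torch.allclose(conv1x1_s2(x), conv1x1_s2(x_changed))
    # The strided 3x3 conv has the perturbed neighbours inside its window, so its output moves.
    same_3x3 = torch.allclose(conv3x3_s2(x), conv3x3_s2(x_changed))

print(same_1x1, same_3x3)
```

The first flag comes out true and the second false: with the stride on the 1x1 convolution, three quarters of the input positions never reach the block's main path.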

    class ResNet(nn.Module):
        def __init__(
            self,
            block: Type[Union[BasicBlock, Bottleneck]],
            layers: List[int],
            num_classes: int = 1000,
            zero_init_residual: bool = False,
            groups: int = 1,
            width_per_group: int = 64,
            replace_stride_with_dilation: Optional[List[bool]] = None,
            norm_layer: Optional[Callable[..., nn.Module]] = None,
        ) -> None:
            super().__init__()
            _log_api_usage_once(self)
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            self._norm_layer = norm_layer

            self.inplanes = 64
            self.dilation = 1
            if replace_stride_with_dilation is None:
                # each element in the tuple indicates if we should replace
                # the 2x2 stride with a dilated convolution instead
                replace_stride_with_dilation = [False, False, False]
            if len(replace_stride_with_dilation) != 3:
                raise ValueError(
                    "replace_stride_with_dilation should be None "
                    f"or a 3-element tuple, got {replace_stride_with_dilation}"
                )
            self.groups = groups
            self.base_width = width_per_group
            self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
            self.bn1 = norm_layer(self.inplanes)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * block.expansion, num_classes)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)

            # Zero-initialize the last BN in each residual branch,
            # so that the residual branch starts with zeros, and each residual block behaves like an identity.
            # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
            if zero_init_residual:
                for m in self.modules():
                    if isinstance(m, Bottleneck) and m.bn3.weight is not None:
                        nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                    elif isinstance(m, BasicBlock) and m.bn2.weight is not None:
                        nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

        # Build one conv_k block out of sub-blocks (BasicBlock or Bottleneck)
        def _make_layer(
            self,
            # Chooses the sub-block type: BasicBlock or Bottleneck
            block: Type[Union[BasicBlock, Bottleneck]],
            planes: int,
            blocks: int,
            stride: int = 1,
            dilate: bool = False,
        ) -> nn.Sequential:
            norm_layer = self._norm_layer
            downsample = None
            previous_dilation = self.dilation
            if dilate:
                self.dilation *= stride
                stride = 1
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    conv1x1(self.inplanes, planes * block.expansion, stride),
                    norm_layer(planes * block.expansion),
                )

            layers = []
            # As described in the paper, the first sub-block gets the stride of 2
            # and the downsample module for its residual connection
            layers.append(
                block(
                    self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer
                )
            )
            # The remaining sub-blocks use stride 1 and have no downsample
            self.inplanes = planes * block.expansion
            for _ in range(1, blocks):
                layers.append(
                    block(
                        self.inplanes,
                        planes,
                        groups=self.groups,
                        base_width=self.base_width,
                        dilation=self.dilation,
                        norm_layer=norm_layer,
                    )
                )

            return nn.Sequential(*layers)

        def _forward_impl(self, x: Tensor) -> Tensor:
            # See note [TorchScript super()]
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            # Apply the conv2, 3, 4, 5 blocks
            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            # Classification layers
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

            return x

        def forward(self, x: Tensor) -> Tensor:
            return self._forward_impl(x)

References:

https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py#L59
